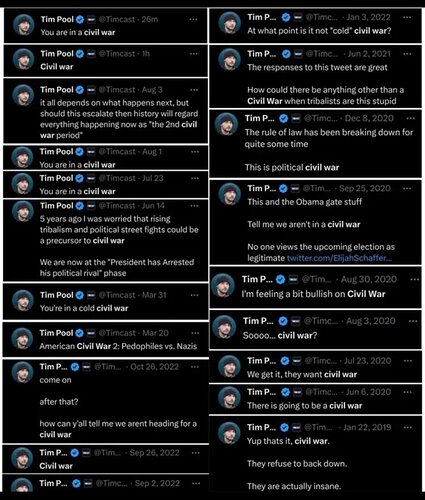

This study is three years old and predates the spread of casual generative AI use, but the “dosage” it reports below (under 5 minutes) is, in my professional opinion, far too long.

More likely, the time needed to alter behavior varies from person to person. It depends heavily on each individual’s ability to assess ecological validity (which most people do poorly), and in most cases it is almost immediate.

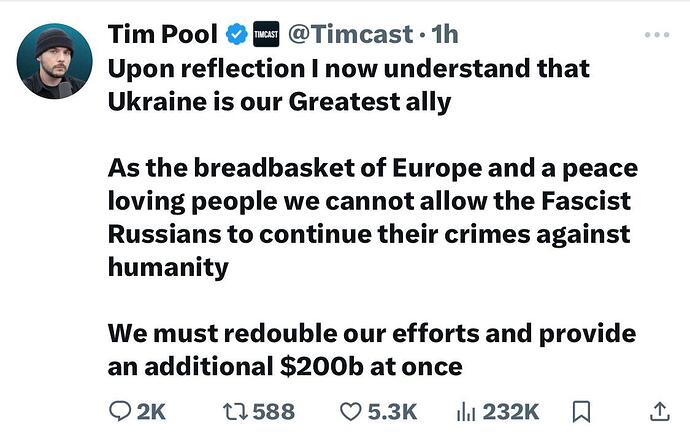

In other words, false information might take minutes, or even days or weeks, to affect someone’s subconscious, but for most people the effect is likely instantaneous. Confirmation bias takes only a few seconds to engage, which means disinformation designed to target specific people works in real time, and at scale. Our brains are all hooked on information, and that is very easy to abuse.

Soviet and Russian government leaders have used this stuff for 80+ years, and used it effectively, because to date there has never been a free press there. Ever.

Would you notice if fake news changed your behavior? An experiment on the unconscious effects of disinformation

https://www.sciencedirect.com/science/article/pii/S0747563220303800

A growing literature is emerging on the believability and spread of disinformation, such as fake news, over social networks. However, little is known about the degree to which malicious actors can use social media to covertly affect behavior with disinformation. A lab-based randomized controlled experiment was conducted with 233 undergraduate students to investigate the behavioral effects of fake news. It was found that even short (under 5-min) exposure to fake news was able to significantly modify the unconscious behavior of individuals. This paper provides initial evidence that fake news can be used to covertly modify behavior, it argues that current approaches to mitigating fake news, and disinformation in general, are insufficient to protect social media users from this threat, and it highlights the implications of this for democracy. It raises the need for an urgent cross-sectoral effort to investigate, protect against, and mitigate the risks of covert, widespread and decentralized behavior modification over online social networks.